ELI5 GenAI Day 02 - 什麼是 GuardRails 與 Responsible AI

### ELI5 GenAI Day 02 - 什麼是 GuardRails 與 Responsible AI

目前在生成式 AI 領域中,Responsibility 與 GuardRails 是兩個重要的議題。Responsibility 指的是 AI 模型的使用者,開發者,以及相關利害關係人,都需要對 AI 模型的使用負責。GuardRails 則是指在 AI 模型的訓練,部署,以及使用過程中,需要設定一些限制,以避免 AI 模型產生不當的結果。

resource: https://www.credo.ai/news/introducing-genai-guardrails-your-control-center-for-safe-responsible-adoption-of-generative-ai

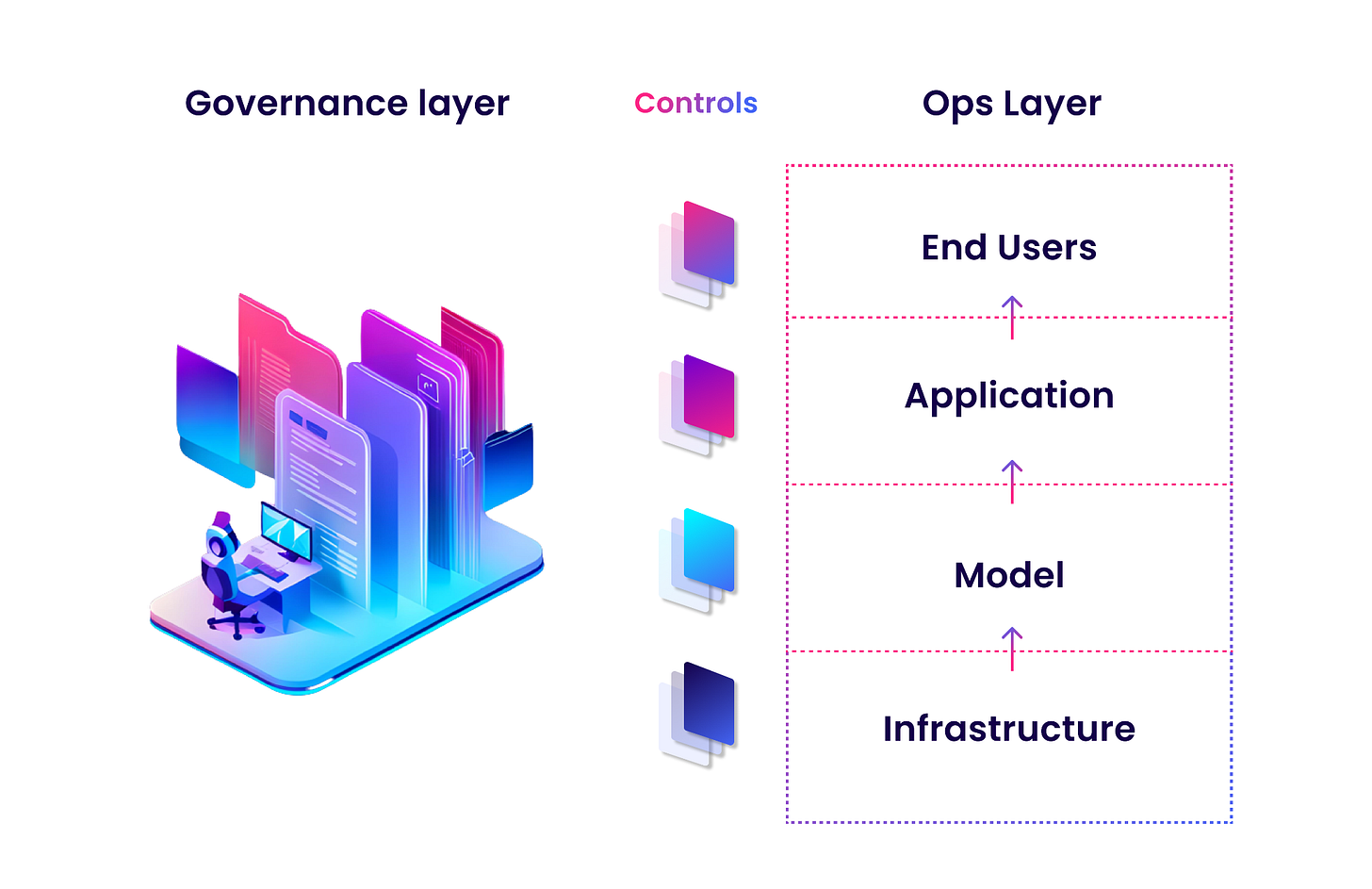

簡單來說,當應用程式結合了 AI 模型時,分為使用者,應用程式,模型本身,以及基礎建設。這四個部分在 Responsibility 都需要有「負責」的概念。而 GuardRails 則是在這四個部分中,設定一些限制,以避免 AI 模型產生不當的結果。

GuardRails 的設定可以分為三個部分:

1. **訓練階段**: 在訓練 AI 模型時,需要設定一些限制,以避免 AI 模型產生不當的結果。例如: 訓練資料的品質,訓練過程的監控,以及訓練過程的透明度。

2. **部署階段**: 在部署 AI 模型時,需要設定一些限制,以避免 AI 模型產生不當的結果。例如: 部署環境的安全性,部署過程的監控,以及部署過程的透明度。

3. **使用階段**: 在使用 AI 模型時,需要設定一些限制,以避免 AI 模型產生不當的結果。例如: 使用者的權限控制,使用過程的監控,以及使用過程的透明度。

GuardRails 有一個範例就是 NeMo-GuardRails,這是一個針對 NeMo 框架的 GuardRails 工具。NeMo 是 NVIDIA 的一個開源語音和語言處理 AI 框架,NeMo-GuardRails 提供了一個用於訓練,部署,以及使用 NeMo 模型的 GuardRails 工具。

而在各大型語言模型的服務商來說,也提供了一些 GuardRails 的服務。例如: OpenAI 有一個[範例] 來說明如何使用 GuardRails 來保護 GPT 模型。Google Vertex AI 也有結合 NeMo-GuardRails 的[範例] 而 Gemini 本身也有一些基本的 [Safety settings],雖然是蠻基本的。

而在接下來的小型語言模型,也會有對應的 GuardRails 服務。我們接著會介紹一下 Gemma 的 ShieldGemma。

### 本篇同步發表於 [iThome] 與 [個人電子報](https://memo.jimmyliao.net/) -> 訂閱訂起來

## English Version

In the field of generative AI, Responsibility and GuardRails are two important issues. Responsibility refers to the responsibility of the users, developers, and other stakeholders to the AI model. GuardRails refers to the need to set some restrictions during the training, deployment, and use of the AI model to avoid inappropriate results.

In simple terms, when an application combines an AI model, it is divided into users, applications, the model itself, and the infrastructure. All four parts need to have the concept of "responsibility" in Responsibility. GuardRails is to set some restrictions in these four parts to avoid inappropriate results from the AI model.

The settings of GuardRails can be divided into three parts:

1. **Training Phase**: When training an AI model, some restrictions need to be set to avoid inappropriate results. For example: the quality of training data, monitoring of the training process, and transparency of the training process.

2. **Deployment Phase**: When deploying an AI model, some restrictions need to be set to avoid inappropriate results. For example: the security of the deployment environment, monitoring of the deployment process, and transparency of the deployment process.

3. **Use Phase**: When using an AI model, some restrictions need to be set to avoid inappropriate results. For example: user permission control, monitoring of the use process, and transparency of the use process.

One example of GuardRails is NeMo-GuardRails, which is a GuardRails tool for the NeMo framework. NeMo is an open-source speech and language processing AI framework from NVIDIA. NeMo-GuardRails provides a GuardRails tool for training, deploying, and using NeMo models.

For major language model service providers, they also provide some GuardRails services. For example, OpenAI has an [example] to demonstrate how to use GuardRails to protect the GPT model. Google Vertex AI also has an example that combines NeMo-GuardRails. Gemini itself also has some basic [Safety settings], although they are quite basic.

For the upcoming small language models, there will also be corresponding GuardRails services. Next, we will introduce Gemma's ShieldGemma.

This post is simultaneously published on [iThome] and my personal [newsletter](https://memo.jimmyliao.net/) -> Subscribe now!